Simulating a Portfolio

We need to keep track of our trader's performance and, more importantly, understand how to reward the trader for making good trade decisions.

Any reinforcement learning system is only as good as its reward function.

In the Mario example, the rewards are very clear: eat coins, don’t jump off cliffs, avoid monsters, and make it to the finish line.

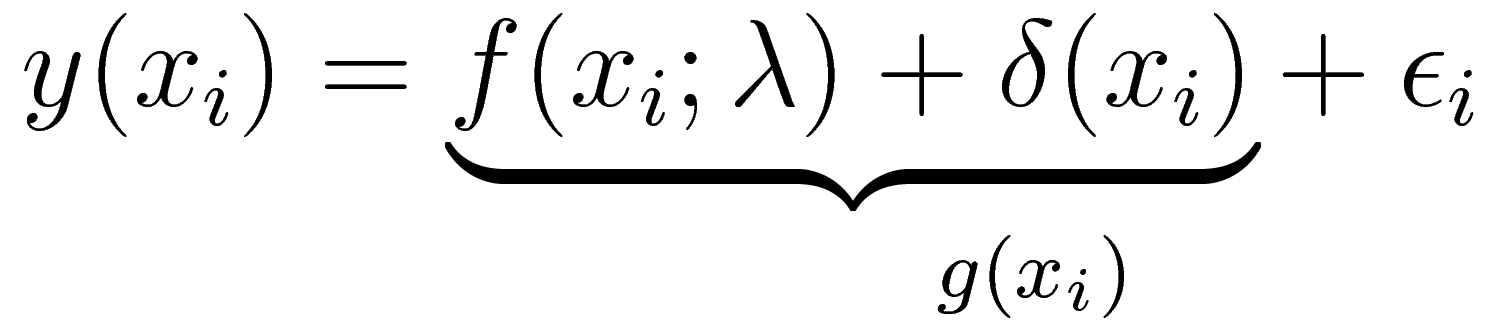

However, in cryptocurrency trading, the reward function requires a lot more thought. Most previous ML-based methods use an instantaneous reward, such as daily profit. Daily profit is usually calculated in additive form, $r_t = V_t - V_{t-1}$, or in productive (multiplicative) form, $r_t = V_t / V_{t-1}$, where $V_t$ is the portfolio value at the end of day $t$.
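As a minimal sketch, assuming we have a list of end-of-day portfolio values (the numbers below are made up for illustration), the additive form is the difference between consecutive days and the productive form is their ratio. The function names are hypothetical.

```python
from typing import List


def additive_daily_profit(values: List[float]) -> List[float]:
    """Additive form: difference between each day's value and the previous day's."""
    return [curr - prev for prev, curr in zip(values, values[1:])]


def productive_daily_profit(values: List[float]) -> List[float]:
    """Productive (multiplicative) form: ratio of each day's value to the previous day's."""
    return [curr / prev for prev, curr in zip(values, values[1:])]


# Hypothetical end-of-day portfolio values, oldest first
values = [100.0, 110.0, 99.0, 99.0]
print(additive_daily_profit(values))    # [10.0, -11.0, 0.0]
print(productive_daily_profit(values))  # [1.1, 0.9, 1.0]
```

Note how a single +10% day followed by a -10% day swings the reward sharply in both forms, which hints at why a per-day signal can be noisy.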

An instantaneous reward is often too noisy to learn from, and it is not consistent with our goal of maximizing long-term profit. We therefore based our reward on the wealth accumulated over the past n days.
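The exact reward formula is not spelled out here, so the following is only a sketch under one assumption: the reward is the relative change in portfolio value between n days ago and today. The function name `accumulated_wealth_reward` is hypothetical.

```python
from typing import Sequence


def accumulated_wealth_reward(values: Sequence[float], n: int) -> float:
    """Hypothetical reward: relative change in wealth over the last n days.

    Assumes `values` holds daily portfolio values, oldest first, and that
    at least n + 1 values are available.
    """
    if n < 1:
        raise ValueError("n must be at least 1")
    if len(values) < n + 1:
        raise ValueError("need at least n + 1 portfolio values")
    past, current = values[-(n + 1)], values[-1]
    return current / past - 1.0


# Hypothetical daily values: reward over the last 3 days is roughly +20%
reward = accumulated_wealth_reward([100.0, 110.0, 99.0, 120.0], n=3)
```

Using a ratio rather than a raw difference keeps the reward independent of the portfolio's absolute size, and looking back n days smooths out single-day swings.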

Our problem formulation is heavily influenced by Deep-Q-Trading, by an algorithms group at Tsinghua University.

Our portfolio class also keeps track of the number of coins and the amount of cash we hold, as well as how our performance compares with the past.
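A minimal sketch of such a class might look like the following. This is not our actual implementation: the class name, method names, and behavior are hypothetical, and it assumes fractional coin amounts, a single coin, and no trading fees or slippage.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Portfolio:
    """Hypothetical sketch: tracks cash, coin holdings, and a daily value history."""
    cash: float
    coins: float = 0.0
    history: List[float] = field(default_factory=list)

    def value(self, price: float) -> float:
        """Total value of cash plus coins at the given coin price."""
        return self.cash + self.coins * price

    def buy(self, amount: float, price: float) -> None:
        cost = amount * price
        if cost > self.cash:
            raise ValueError("insufficient cash")
        self.cash -= cost
        self.coins += amount

    def sell(self, amount: float, price: float) -> None:
        if amount > self.coins:
            raise ValueError("insufficient coins")
        self.coins -= amount
        self.cash += amount * price

    def record(self, price: float) -> None:
        """Append today's total value so we can compare against past days."""
        self.history.append(self.value(price))

    def change_since(self, n: int) -> float:
        """Relative change in value compared with n recorded days ago."""
        if n < 1 or len(self.history) < n + 1:
            raise ValueError("not enough history")
        return self.history[-1] / self.history[-(n + 1)] - 1.0


# Usage: start with 1000 in cash, buy 5 coins at 100, then the price rises to 120
p = Portfolio(cash=1000.0)
p.record(price=100.0)
p.buy(5.0, price=100.0)
p.record(price=120.0)
gain = p.change_since(1)  # roughly 0.1, i.e. a 10% gain over one day
```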

For more, see our implementation here.